Director Configuration

Of all the configuration files needed to run Bareos, the Director’s is the most complicated and the one that you will need to modify the most often as you add clients or modify the FileSets.

For a general discussion of configuration files and resources, including the recognized data types, see Customizing the Configuration.

Everything revolves around a job and is tied to a job in one way or another.

The Bareos Director knows about the following resource types:

Director Resource – to define the Director’s name and its access password used for authenticating the Console program. Only a single Director resource definition may appear in the Director’s configuration file.

Job Resource – to define the backup/restore Jobs and to tie together the Client, FileSet and Schedule resources to be used for each Job. Normally, you will have one Job per client, each with its own name.

JobDefs Resource – optional resource for providing defaults for Job resources.

Schedule Resource – to define when a Job has to run. You may have any number of Schedules, but each job will reference only one.

FileSet Resource – to define the set of files to be backed up for each Client. You may have any number of FileSets but each Job will reference only one.

Client Resource – to define what Client is to be backed up. You will generally have multiple Client definitions. Each Job will reference only a single client.

Storage Resource – to define on what physical device the Volumes should be mounted. You may have one or more Storage definitions.

Pool Resource – to define the pool of Volumes that can be used for a particular Job. Most people use a single default Pool. However, if you have a large number of clients or volumes, you may want to have multiple Pools. Pools allow you to restrict a Job (or a Client) to use only a particular set of Volumes.

Catalog Resource – to define in what database to keep the list of files and the Volume names where they are backed up. Most people only use a single catalog. Using multiple catalogs is possible, but it is neither advised nor supported; see Multiple Catalogs.

Messages Resource – to define where error and information messages are to be sent or logged. You may define multiple different message resources and hence direct particular classes of messages to different users or locations (files, …).

Director Resource

The Director resource defines the attributes of the Directors running on the network. Only a single Director resource is allowed.

The following is an example of a valid Director resource definition:
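The following is a minimal sketch. The resource names (bareos-dir, Daemon), the password and the query file path are assumptions based on a typical Linux package installation. Adjust them to your platform.

```conf
Director {
  Name = bareos-dir
  # Required; must match Password (Console->Director) in the Console configuration.
  Password = "change-me"
  # Required; canned SQL statements for the query command (path is platform specific).
  Query File = "/usr/lib/bareos/scripts/query.sql"
  # Messages resource for messages not associated with a specific Job.
  Messages = Daemon
  Maximum Concurrent Jobs = 10
  Working Directory = "/var/lib/bareos"
}
```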


- Audit Events

- Type:

- Since Version:

14.2.0

Specify which commands (see Console Commands) will be audited. If nothing is specified (and Auditing (Dir->Director) is enabled), all commands will be audited.

- Auditing

- Type:

- Default value:

no

- Since Version:

14.2.0

This directive enables or disables auditing of interactions with the Bareos Director. If enabled, audit messages are generated. The messages resource configured in Messages (Dir->Director) defines how these messages are handled.

- Backend Directory

- Type:

- Default value:

/usr/lib/bareos/backends (platform specific)

This directive specifies a list of directories from which the Bareos Director loads its dynamic backends.

- Description

- Type:

The text field contains a description of the Director that will be displayed in the graphical user interface. This directive is optional.

- Dir Address

- Type:

- Default value:

9101

This directive is optional, but if it is specified, it will cause the Director server (for the Console program) to bind to the specified address. If neither this directive nor Dir Addresses (Dir->Director) is specified, the Director binds to both the IPv6 and IPv4 default addresses (the default).

- Dir Addresses

- Type:

- Default value:

9101

Specify the ports and addresses on which the Director daemon will listen for Bareos Console connections.

Please note that if you use the Dir Addresses (Dir->Director) directive, you must not use either a Dir Port (Dir->Director) or a Dir Address (Dir->Director) directive in the same resource.
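Below is a sketch of Dir Addresses that listens on one IPv4 and one IPv6 address. The addresses are placeholders taken from the documentation ranges (192.0.2.0/24 and 2001:db8::/32). The nested ipv4/ipv6 blocks with addr and port reflect our understanding of the Dir Addresses syntax.

```conf
Director {
  Name = bareos-dir
  # ... other directives ...
  # Do not combine with Dir Port or Dir Address in this resource.
  Dir Addresses = {
    ipv4 = { addr = 192.0.2.10; port = 9101; }
    ipv6 = { addr = 2001:db8::10; port = 9101; }
  }
}
```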

- Dir Port

- Type:

- Default value:

9101

Specify the port on which the Director daemon will listen for Bareos Console connections. This same port number must be specified in the Director resource of the Console configuration file. This directive should not be used if you specify the Dir Addresses (Dir->Director) (N.B. plural) directive. By default, the Director will listen on both the IPv6 and IPv4 default addresses on the port you set. If you want to listen on either IPv4 or IPv6 only, you have to specify it with Dir Address (Dir->Director), or remove Dir Port (Dir->Director) and use Dir Addresses (Dir->Director) instead.

- Dir Source Address

- Type:

- Default value:

0

This record is optional, and if it is specified, it will cause the Director server (when initiating connections to a storage or file daemon) to source its connections from the specified address. Only a single IP address may be specified. If this record is not specified, the Director server will source its outgoing connections according to the system routing table (the default).

- FD Connect Timeout

- Type:

- Default value:

180

The time that the Director should keep trying to contact the File daemon to start a job. After this time, the Director cancels the job.

- Heartbeat Interval

- Type:

- Default value:

0

This directive is optional and if specified will cause the Director to set a keepalive interval (heartbeat) in seconds on each of the sockets it opens for the Client resource. This value will override any specified at the Director level. It is implemented only on systems that provide the setsockopt TCP_KEEPIDLE function (Linux, …). The default value is zero, which means no change is made to the socket.
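The sketch below shows the connection-related time values together. The values are illustrative assumptions. Time values without a unit are taken as seconds, so the defaults 180 and 1800 correspond to 3 and 30 minutes.

```conf
Director {
  Name = bareos-dir
  # ... other directives ...
  # Stop trying to reach a File daemon after 5 minutes (default: 180 seconds).
  FD Connect Timeout = 5 minutes
  # Stop trying to reach a Storage daemon after 10 minutes (default: 1800 seconds).
  SD Connect Timeout = 10 minutes
  # Set a 60-second keepalive (TCP_KEEPIDLE) on opened sockets (default: 0 = unchanged).
  Heartbeat Interval = 60
}
```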

- Key Encryption Key

- Type:

This key is used to encrypt the Security Key that is exchanged between the Director and the Storage Daemon for supporting Application Managed Encryption (AME). For security reasons each Director should have a different Key Encryption Key.

- Maximum Concurrent Jobs

- Type:

- Default value:

1

This directive specifies the maximum total number of Director Jobs that may run concurrently.

The Volume format becomes more complicated with multiple simultaneous jobs; consequently, restores may take longer if Bareos must sort through interleaved volume blocks from multiple simultaneous jobs. This can be avoided by having each simultaneous job write to a different volume or by using data spooling. Spooling first writes the data of all jobs to disk simultaneously, then writes one spool file at a time to the volume, which avoids excessive interleaving of the different job blocks.

See also the section about Concurrent Jobs.
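The following sketch shows the setup described above. The Director allows up to ten concurrent Jobs, and a Job enables data spooling so that its blocks reach the Volume one spool file at a time. The names and the value 10 are assumptions. Spool Data is a Job directive, and spooling must also be possible on the Storage daemon side (not shown). Other resources, such as Client and Storage, carry their own concurrency limits that may also need raising.

```conf
Director {
  Name = bareos-dir
  # ... other directives ...
  Maximum Concurrent Jobs = 10
}

Job {
  Name = "client1-backup"
  JobDefs = "DefaultJob"
  Client = client1-fd
  # Spool to disk first, then write one spool file at a time to the Volume.
  Spool Data = yes
}
```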

- Maximum Connections

- Type:

- Default value:

30

- Since Version:

deprecated

This directive has been deprecated and has no effect.

- Maximum Console Connections

- Type:

- Default value:

20

This directive specifies the maximum number of Console connections that can be open concurrently.

- Messages

- Type:

The messages resource specifies where to deliver Director messages that are not associated with a specific Job. Most messages are specific to a job and will be directed to the Messages resource specified by the job. However, some messages can occur when no job is running.

- Name

- Required:

True

- Type:

The name of the resource.

The director name used by the system administrator.

- NDMP Log Level

- Type:

- Default value:

4

- Since Version:

13.2.0

This directive sets the loglevel for the NDMP protocol library.

- NDMP Namelist Fhinfo Set Zero For Invalid Uquad

- Type:

- Default value:

no

- Since Version:

20.0.6

This directive enables a bug workaround for Isilon 9.1.0.0 systems, where the tape offset in NDMP namelists (also known as fhinfo) is sanity-checked so that the valid value -1 is no longer accepted. The Isilon system sends the following error message: ‘Invalid nlist.tape_offset -1 at index 1 - tape offset not aligned at 512B boundary’. The workaround sends 0 instead of -1, which the Isilon system accepts, so normal operation resumes.

- NDMP Snooping

- Type:

- Since Version:

13.2.0

This directive enables snooping and pretty-printing of NDMP protocol information in debugging mode.

- Optimize For Size

- Type:

- Default value:

no

If set to yes, the Director optimizes for memory size rather than speed. It tries to use less memory, which may lead to somewhat lower speed. It is currently used mostly for keeping all hardlinks in memory.

If neither Optimize For Size (Dir->Director) nor Optimize For Speed (Dir->Director) is enabled, Optimize For Size (Dir->Director) is enabled by default.

- Optimize For Speed

- Type:

- Default value:

no

If set to yes, the Director optimizes for speed rather than memory size. It tries to use more memory, which leads to somewhat higher speed. It is currently used mostly for keeping all hardlinks in memory. This option is related to Optimize For Size (Dir->Director). Set only one of them to yes, as they are mutually exclusive.

- Password

- Required:

True

- Type:

Specifies the password that must be supplied for the default Bareos Console to be authorized. This password corresponds to Password (Console->Director) in the Console configuration file. The password is plain text.
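The sketch below shows two resources side by side: the Director resource in the Director configuration, and the matching Director resource in the Console configuration (for example bconsole.conf). The host name and password are placeholders. Both the port and the password must agree between the two files.

```conf
# Director configuration: Director resource
Director {
  Name = bareos-dir
  Dir Port = 9101
  Password = "change-me"
  # ... other directives ...
}

# Console configuration (e.g. bconsole.conf): Director resource
Director {
  Name = bareos-dir
  Address = bareos.example.com
  Dir Port = 9101          # same port as the Director listens on
  Password = "change-me"   # same password as in the Director resource
}
```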

- Pid Directory

- Type:

- Since Version:

deprecated

Since version 21.0.0, this directive has no effect. To create a PID file, pass the -p <file> option to the Director binary, where <file> is the path to a PID file of your choosing. By default, no PID file is created.

- Plugin Directory

- Type:

- Since Version:

14.2.0

Plugins are loaded from this directory. To load only specific plugins, use ‘Plugin Names’.

- Plugin Names

- Type:

- Since Version:

14.2.0

List of plugins that should be loaded from ‘Plugin Directory’ (basenames only; ‘-dir.so’ is appended automatically). If empty, all plugins are loaded.
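The following is a sketch of loading a single Director plugin. Both the plugin directory path and the plugin name python3 are assumptions and are platform and version specific. Check which *-dir.so files your installation actually provides.

```conf
Director {
  Name = bareos-dir
  # ... other directives ...
  Plugin Directory = "/usr/lib/bareos/plugins"
  # Loads only python3-dir.so; "-dir.so" is appended automatically.
  Plugin Names = "python3"
}
```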

- Query File

- Required:

True

- Type:

This directive is required and specifies a directory and file in which the Director can find the canned SQL statements for the query command.

- SD Connect Timeout

- Type:

- Default value:

1800

The time that the Director should keep trying to contact the Storage daemon to start a job. After this time, the Director cancels the job.

- Secure Erase Command

- Type:

- Since Version:

15.2.1

Specify a command that will be called when Bareos unlinks files.

When files are no longer needed, Bareos will delete (unlink) them. With this directive, it will call the specified command to delete these files. See Secure Erase Command for details.

- Statistics Collect Interval

- Type:

- Default value:

0

- Since Version:

deprecated

Bareos can collect statistics from its connected devices. To do so, Collect Statistics (Dir->Storage) must be enabled. This interval defines how often the Director connects to the attached Storage Daemons to collect the statistics.

- Statistics Retention

- Type:

- Default value:

160704000

- Since Version:

deprecated

The Statistics Retention directive defines the length of time that Bareos will keep statistics job records in the Catalog database after the Job End time (in the catalog JobHisto table). When this time period expires, and if the user runs the prune stats command, Bareos will prune (remove) Job records that are older than the specified period.

These statistics records are not used for restore purposes, but mainly for capacity planning, billing, etc. See chapter Job Statistics for additional information.
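Because the default is given in seconds, a short conversion shows the retention period it implies:

$$
\frac{160\,704\,000\ \text{s}}{86\,400\ \text{s/day}} = 1860\ \text{days} = 5 \times 12 \times 31\ \text{days} \approx 5.1\ \text{years}
$$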

- Subscriptions

- Type:

- Default value:

0

- Since Version:

12.4.4

If you want to check that the number of active clients does not exceed a specific number, define that number here and check it with the status subscriptions command.

However, this is only intended to give a hint. No active limiting is implemented.
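The following sketch uses an arbitrary example value of 50.

```conf
Director {
  Name = bareos-dir
  # ... other directives ...
  # Reference value only; exceeding it is not prevented.
  Subscriptions = 50
}
```

After reloading the configuration, run status subscriptions in the Console to compare the number of active clients against this value.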

- TLS Allowed CN

- Type:

“Common Name”s (CNs) of the allowed peer certificates.

- TLS DH File

- Type:

Path to PEM encoded Diffie-Hellman parameter file. If this directive is specified, DH key exchange will be used for the ephemeral keying, allowing for forward secrecy of communications.

- TLS Enable

- Type:

- Default value:

yes

Enable TLS support.

Bareos can be configured to encrypt all its network traffic. See chapter TLS Configuration Directives for how the Bareos Director (and the other components) must be configured to use TLS.

- TLS Key

- Type:

Path of a PEM encoded private key. It must correspond to the specified “TLS Certificate”.

- TLS Require

- Type:

- Default value:

no

Unless this is set to yes, Bareos can fall back to unencrypted connections. Enabling this implicitly sets “TLS Enable = yes”.

- TLS Verify Peer

- Type:

- Default value:

no

If disabled, all certificates signed by a known CA will be accepted. If enabled, the CN of a certificate must match the Address or be listed in “TLS Allowed CN”.
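The following is a sketch of a strict certificate-based setup. The file paths and the allowed CN are placeholders. TLS CA Certificate File and TLS Certificate are described in the TLS Configuration Directives chapter rather than here.

```conf
Director {
  Name = bareos-dir
  # ... other directives ...
  # Implies TLS Enable = yes and forbids falling back to unencrypted connections.
  TLS Require = yes
  TLS Verify Peer = yes
  # With TLS Verify Peer, the peer CN must match its Address or be listed here.
  TLS Allowed CN = "console.example.com"
  TLS CA Certificate File = "/etc/bareos/tls/ca.pem"
  TLS Certificate = "/etc/bareos/tls/bareos-dir.pem"
  TLS Key = "/etc/bareos/tls/bareos-dir.key"
}
```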

- Ver Id

- Type:

An identifier string that can be used for support purposes. This string is displayed by the version command.

- Working Directory

- Type:

- Default value:

/var/lib/bareos (platform specific)

This directive is optional and specifies a directory in which the Director may put its status files. This directory should be used only by Bareos but may be shared by other Bareos daemons. Standard shell expansion of the directory is done when the configuration file is read, so that values such as $HOME will be properly expanded.

The working directory specified must already exist and be readable and writable by the Bareos daemon referencing it.

Job Resource

The Job resource defines a Job (Backup, Restore, …) that Bareos must perform. Each Job resource definition contains the name of a Client and a FileSet to back up, the Schedule for the Job, where the data are to be stored, and what media Pool can be used. In effect, each Job resource must specify What, Where, How, and When, that is, FileSet, Storage, Backup/Restore/Level, and Schedule, respectively. Note that the FileSet must be specified for a restore job for historical reasons, but it is no longer used.

Only a single type (Backup, Restore, …) can be specified for any job. If you want to back up multiple FileSets on the same Client or multiple Clients, you must define a Job for each one.

Note, you define only a single Job to do the Full, Differential, and Incremental backups since the different backup levels are tied together by a unique Job name. Normally, you will have only one Job per Client, but if a client has a really huge number of files (more than several million), you might want to split it into several Jobs each with a different FileSet covering only parts of the total files.

Multiple Storage daemons are not currently supported for Jobs. If you do want to use multiple storage daemons, you will need to create a different Job and ensure that the combination of Client and FileSet is unique.

Warning

Bareos uses only Client (Dir->Job) and File Set (Dir->Job) to determine which jobids belong together.

If jobs A and B have the same client and fileset defined, the resulting jobids will be intermixed as follows:

When a job looks up its predecessor to determine its required level and since-time, it will consider all jobs with the same client and fileset.

When restoring a client you select the fileset and all jobs that used that fileset will be considered.

Therefore, if you want separate backups, you have to duplicate your filesets with a different name and the same content.
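The following sketch keeps two backups of the same data separate by giving each Job its own, identically filled FileSet. All resource names and the /etc path are hypothetical.

```conf
FileSet {
  Name = "client1-etc-a"
  Include {
    File = /etc
  }
}

FileSet {
  Name = "client1-etc-b"        # same content, different name
  Include {
    File = /etc
  }
}

Job {
  Name = "client1-etc-a"
  JobDefs = "DefaultJob"
  Client = client1-fd
  FileSet = "client1-etc-a"
}

Job {
  Name = "client1-etc-b"
  JobDefs = "DefaultJob"
  Client = client1-fd
  FileSet = "client1-etc-b"     # distinct FileSet keeps the jobids apart
}
```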


- Accurate

- Type:

- Default value:

no

In accurate mode, the File daemon knows exactly which files were present after the last backup. So it is able to handle deleted or renamed files.

When restoring a FileSet for a specified date (including “most recent”), Bareos is able to restore exactly the files and directories that existed at the time of the last backup prior to that date including ensuring that deleted files are actually deleted, and renamed directories are restored properly.

When doing VirtualFull backups, it is advised to use the accurate mode, otherwise the VirtualFull might contain already deleted files.

However, accurate mode also has disadvantages:

The File daemon must keep data concerning all files in memory. If you do not have sufficient memory, the backup may either be terribly slow or fail. For 500,000 files (a typical desktop Linux system), it will require approximately 64 megabytes of RAM on your File daemon to hold the required information.
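Dividing the figure above gives a rough per-file cost, which can be scaled to larger File daemons. This estimate assumes that "64 megabytes" means 64 MiB and that memory grows linearly with the number of files. It is not a measured value.

$$
\frac{64 \times 2^{20}\ \text{bytes}}{500\,000\ \text{files}} \approx 134\ \text{bytes/file}
\qquad\Rightarrow\qquad
5\,000\,000\ \text{files} \times 134\ \text{bytes/file} \approx 640\ \text{MiB}
$$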

- Add Prefix

- Type:

This directive applies only to a Restore job and specifies a prefix to the directory name of all files being restored. This uses the File Relocation feature.

- Add Suffix

- Type:

This directive applies only to a Restore job and specifies a suffix to all files being restored. This uses the File Relocation feature.

For example, with Add Suffix=.old, /etc/passwd will be restored to /etc/passwd.old.
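The following is a sketch of a restore Job that relocates every restored file by appending a suffix, as in the /etc/passwd example. The Job, Client, FileSet, Storage and Pool names are hypothetical.

```conf
Job {
  Name = "RestoreWithSuffix"
  Type = Restore
  Client = client1-fd
  FileSet = "LinuxAll"          # required for historical reasons, not used
  Storage = File
  Pool = Incremental
  Messages = Standard
  # /etc/passwd is restored as /etc/passwd.old
  Add Suffix = ".old"
}
```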

- Allow Duplicate Jobs

- Type:

- Default value:

yes

Allow Duplicate Jobs usage

A duplicate job in the sense we use it here means a second or subsequent job with the same name starts. This happens most frequently when the first job runs longer than expected because no tapes are available.

If this directive is enabled, duplicate jobs will be run. If the directive is set to no, only one job of a given name may run at one time. The action that Bareos takes to ensure only one job runs is determined by the directives Cancel Lower Level Duplicates (Dir->Job), Cancel Queued Duplicates (Dir->Job) and Cancel Running Duplicates (Dir->Job).

If Allow Duplicate Jobs is set to no, none of these directives is set to yes, and two jobs are present, then the current job (the second one started) will be cancelled.

Virtual backup jobs of a consolidation are not affected by this directive. In those cases, the directive is ignored.

- Allow Mixed Priority

- Type:

- Default value:

no

When set to yes, this job may run even if lower priority jobs are already running. This means a high priority job will not have to wait for other jobs to finish before starting. The scheduler will only mix priorities when all running jobs have this set to true.

Note that only higher priority jobs will start early. Suppose the director will allow two concurrent jobs, and that two jobs with priority 10 are running, with two more in the queue. If a job with priority 5 is added to the queue, it will be run as soon as one of the running jobs finishes. However, new priority 10 jobs will not be run until the priority 5 job has finished.
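The following sketch matches the scenario above. Both Jobs allow mixed priorities, so the priority 5 Job can start while priority 10 Jobs are running, provided a concurrency slot is free. In Bareos, a lower number means a higher priority. The Job and Client names are hypothetical.

```conf
Job {
  Name = "nightly-backup"
  JobDefs = "DefaultJob"
  Client = client1-fd
  Priority = 10
  # Running jobs must also allow mixing, otherwise priorities are not mixed.
  Allow Mixed Priority = yes
}

Job {
  Name = "urgent-backup"
  JobDefs = "DefaultJob"
  Client = client2-fd
  Priority = 5                  # higher priority than 10
  Allow Mixed Priority = yes
}
```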

- Always Incremental

- Type:

- Default value:

no

- Since Version:

16.2.4

Enable/disable always incremental backup scheme.

- Always Incremental Job Retention

- Type:

- Default value:

0

- Since Version:

16.2.4

Backup Jobs older than the specified time duration will be merged into a new Virtual backup.

- Always Incremental Keep Number

- Type:

- Default value:

0

- Since Version:

16.2.4

Guarantee that at least the specified number of Backup Jobs will persist, even if they are older than “Always Incremental Job Retention”.

- Always Incremental Max Full Age

- Type:

- Since Version:

16.2.4

If “AlwaysIncrementalMaxFullAge” is set, only incremental backups are considered during consolidations, while the Full Backup is left in place, which reduces the amount of data being consolidated. The Full Backup becomes part of the consolidation only once it is older than “AlwaysIncrementalMaxFullAge”, which keeps the Full Backup from becoming too old.
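The following is a sketch of a Job using the always incremental scheme with the retention directives above. The durations, the keep number and the Pool names (AI-Incremental, AI-Consolidated) are assumptions. Accurate mode is enabled because consolidation produces Virtual backups (see Accurate). The actual merging is performed by a separate consolidation Job, which is not shown.

```conf
Job {
  Name = "client1-ai"
  JobDefs = "DefaultJob"
  Client = client1-fd
  Accurate = yes
  Always Incremental = yes
  # Merge backups older than 7 days ...
  Always Incremental Job Retention = 7 days
  # ... but always keep at least 7 backup jobs.
  Always Incremental Keep Number = 7
  # Include the Full backup in consolidation only once it is older than 14 days.
  Always Incremental Max Full Age = 14 days
  Pool = AI-Incremental
  Full Backup Pool = AI-Consolidated
}
```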

- Backup Format

- Type:

- Default value:

Native

The backup format used for protocols that support multiple formats. By default, the Bareos Native Backup format is used. Other protocols, such as NDMP, support different backup formats, for instance:

Dump

Tar

SMTape

- Base

- Type:

The Base directive specifies the list of jobs that will be used as the base during a Full backup. This directive is optional. See the Base Job chapter for more information.

- Bootstrap

- Type:

The Bootstrap directive specifies a bootstrap file that, if provided, will be used during Restore Jobs and is ignored in other Job types. The bootstrap file contains the list of tapes to be used in a restore Job as well as which files are to be restored. Specification of this directive is optional, and if specified, it is used only for a restore job. In addition, when running a Restore job from the console, this value can be changed.

If you use the restore command in the Console program to start a restore job, the bootstrap file will be created automatically from the files you select to be restored.

For additional details see The Bootstrap File chapter.

- Cancel Lower Level Duplicates

- Type:

- Default value:

no

If Allow Duplicate Jobs (Dir->Job) is set to no and this directive is set to yes, Bareos will choose, among the duplicated jobs, the one with the highest level. For example, it will cancel a previous Incremental to run a Full backup. This works only for Backup jobs. If the levels of the duplicated jobs are the same, nothing is done and the directives Cancel Queued Duplicates (Dir->Job) and Cancel Running Duplicates (Dir->Job) will be examined.

- Cancel Queued Duplicates

- Type:

- Default value:

no

If Allow Duplicate Jobs (Dir->Job) is set to no and this directive is set to yes, any job that is already queued to run but not yet running will be canceled.

- Cancel Running Duplicates

- Type:

- Default value:

no

If Allow Duplicate Jobs (Dir->Job) is set to no and this directive is set to yes, any job that is already running will be canceled.
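The following sketch combines the duplicate-control directives for one backup Job. The Job, JobDefs and Client names are hypothetical.

```conf
Job {
  Name = "client1-backup"
  JobDefs = "DefaultJob"
  Client = client1-fd
  # Never run two instances of this Job at the same time.
  Allow Duplicate Jobs = no
  # Keep the higher level: e.g. cancel a previous Incremental to run a Full.
  Cancel Lower Level Duplicates = yes
  # For equal levels: cancel a duplicate that is queued but not yet running.
  Cancel Queued Duplicates = yes
}
```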

- Catalog

- Type:

- Since Version:

13.4.0

This specifies the name of the catalog resource to be used for this Job. When a catalog is defined in a Job it will override the definition in the client.

- Client

- Type:

The Client directive specifies the Client (File daemon) that will be used in the current Job. Only a single Client may be specified in any one Job. The Client runs on the machine to be backed up, and sends the requested files to the Storage daemon for backup, or receives them when restoring. For additional details, see the Client Resource of this chapter. Whether this directive is required depends on the version. Before 13.3.0, it is required for all Job types. From 13.3.0 on, it is required for all Job types except Copy and Migrate jobs.

- Client Run After Job

- Type:

This is a shortcut for the Run Script (Dir->Job) resource that runs on the client after a backup job.

- Client Run Before Job

- Type:

This is basically a shortcut for the Run Script (Dir->Job) resource that runs on the client before the backup job.

Warning

For compatibility reasons, with this shortcut the command is executed directly when the client receives it. If the command fails, other remote runscripts will be discarded. To be sure that all commands will be sent and executed, you have to use the Run Script (Dir->Job) syntax.
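The following sketch contrasts the shortcut with an equivalent Run Script block. The script path is hypothetical. Check the option names used here (RunsWhen, RunsOnClient, FailJobOnError) against the Run Script (Dir->Job) description.

```conf
Job {
  Name = "client1-backup"
  JobDefs = "DefaultJob"
  Client = client1-fd

  # Shortcut form (executed as soon as the client receives it):
  # Client Run Before Job = "/usr/local/bin/pre-backup.sh"

  # Equivalent Run Script form:
  RunScript {
    Command = "/usr/local/bin/pre-backup.sh"
    RunsWhen = Before
    RunsOnClient = yes
    FailJobOnError = yes
  }
}
```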

- Differential Backup Pool

- Type:

The Differential Backup Pool specifies a Pool to be used for Differential backups. It will override any Pool (Dir->Job) specification during a Differential backup.

- Differential Max Runtime

- Type:

The time specifies the maximum allowed time that a Differential backup job may run, counted from when the job starts (not necessarily the same as when the job was scheduled).

- Dir Plugin Options

- Type:

These settings are plugin specific, see Director Plugins.

- Enabled

- Type:

- Default value:

yes

Enable or disable this resource.

This directive allows you to enable or disable automatic execution via the scheduler of a Job.

- FD Plugin Options

- Type:

These settings are plugin specific, see File Daemon Plugins.

- File History Size

- Type:

- Default value:

10000000

- Since Version:

15.2.4

When using NDMP and Save File History (Dir->Job) is enabled, this directive controls the size of the internal temporary database (LMDB) used to translate NDMP file and directory information into Bareos file and directory information. File History Size must be greater than the number of directories plus files of this NDMP backup job.

Warning

This uses a large memory-mapped file (File History Size * 256 bytes, i.e. about 2.4 GiB for File History Size = 10000000). On 32-bit systems, or if a memory limit exists for the user running the Bareos Director (normally bareos; verify with su - bareos -s /bin/sh -c "ulimit -a"), this may fail.
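The sizing rule and the size of the memory map can be checked with a quick calculation. The second line uses a hypothetical NDMP job with somewhat fewer than 25,000,000 directories and files, so File History Size = 25000000 satisfies the rule.

$$
\text{File History Size} > N_{\text{dirs}} + N_{\text{files}}, \qquad
\text{mapped size} = \text{File History Size} \times 256\ \text{bytes}
$$

$$
10\,000\,000 \times 256\ \text{bytes} = 2.56 \times 10^{9}\ \text{bytes} \approx 2.38\ \text{GiB}, \qquad
25\,000\,000 \times 256\ \text{bytes} = 6.4 \times 10^{9}\ \text{bytes} \approx 5.96\ \text{GiB}
$$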

- File Set

- Type:

The FileSet directive specifies the FileSet that will be used in the current Job. The FileSet specifies which directories (or files) are to be backed up, and what options to use (e.g. compression, …). Only a single FileSet resource may be specified in any one Job. For additional details, see the FileSet Resource section of this chapter. This directive is required for all Job types in versions before 13.3.0, and for all Job types except Copy and Migrate from 13.3.0 on.

- Full Backup Pool

- Type:

The Full Backup Pool specifies a Pool to be used for Full backups. It will override any Pool (Dir->Job) specification during a Full backup.

- Full Max Runtime

- Type:

The time specifies the maximum allowed time that a Full backup job may run, counted from when the job starts (not necessarily the same as when the job was scheduled).

- Incremental Backup Pool

- Type:

The Incremental Backup Pool specifies a Pool to be used for Incremental backups. It will override any Pool (Dir->Job) specification during an Incremental backup.

- Incremental Max Runtime

- Type:

The time specifies the maximum allowed time that an Incremental backup job may run, counted from when the job starts (not necessarily the same as when the job was scheduled).
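The following sketch shows per-level Pools and run-time limits in one Job. The Pool names follow a common Full/Differential/Incremental layout and, like the durations, are assumptions.

```conf
Job {
  Name = "client1-backup"
  JobDefs = "DefaultJob"
  Client = client1-fd
  # Used when no level-specific Pool applies.
  Pool = Incremental
  # Each of these overrides Pool for its level.
  Full Backup Pool = Full
  Differential Backup Pool = Differential
  Incremental Backup Pool = Incremental
  # Counted from the actual job start, not from the scheduled time.
  Full Max Runtime = 20 hours
  Differential Max Runtime = 8 hours
  Incremental Max Runtime = 4 hours
}
```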

- Job Defs

- Type:

If a Job Defs resource name is specified, all the values contained in the named Job Defs resource will be used as the defaults for the current Job. Any value that you explicitly define in the current Job resource, will override any defaults specified in the Job Defs resource. The use of this directive permits writing much more compact Job resources where the bulk of the directives are defined in one or more Job Defs. This is particularly useful if you have many similar Jobs but with minor variations such as different Clients. To structure the configuration even more, Job Defs themselves can also refer to other Job Defs.
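The following sketch shows a JobDefs chain. DefaultJob holds the common values, AccurateJob refers to DefaultJob, and each Job only sets what differs. All names are hypothetical.

```conf
JobDefs {
  Name = "DefaultJob"
  Type = Backup
  Level = Incremental
  FileSet = "LinuxAll"
  Schedule = "WeeklyCycle"
  Storage = File
  Messages = Standard
  Pool = Incremental
  Full Backup Pool = Full
  Priority = 10
}

JobDefs {
  Name = "AccurateJob"
  JobDefs = "DefaultJob"        # Job Defs may refer to other Job Defs
  Accurate = yes
}

Job {
  Name = "client1-backup"
  JobDefs = "AccurateJob"
  Client = client1-fd           # only the per-client value is set here
}

Job {
  Name = "client2-backup"
  JobDefs = "AccurateJob"
  Client = client2-fd
  FileSet = "client2-files"     # explicit value overrides the JobDefs default
}
```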

- Level

- Type:

The Level directive specifies the default Job level to be run. Each different Type (Dir->Job) (Backup, Restore, Verify, …) has a different set of Levels that can be specified. The Level is normally overridden by a different value that is specified in the Schedule Resource. This directive is not required, but a level must be specified either by this directive or as an override in the Schedule Resource.

- Backup

For a Backup Job, the Level may be one of the following:

- Full

When the Level is set to Full all files in the FileSet whether or not they have changed will be backed up.

- Incremental

When the Level is set to Incremental, all files specified in the FileSet that have changed since the last successful backup of the same Job using the same FileSet and Client will be backed up. If the Director cannot find a previous valid Full backup, then the job will be upgraded into a Full backup. When the Director looks for a valid backup record in the catalog database, it looks for a previous Job with:

The same Job name.

The same Client name.

The same FileSet (any change to the definition of the FileSet, such as adding or deleting a file in the Include or Exclude sections, constitutes a different FileSet).

The Job was a Full, Differential, or Incremental backup.

The Job terminated normally (i.e. did not fail and was not canceled).

The Job started no longer ago than Max Full Interval.

If any of the above conditions does not hold, the Director will upgrade the Incremental to a Full save. Otherwise, the Incremental backup will be performed as requested.

The File daemon (Client) decides which files to back up for an Incremental backup by comparing the start time of the prior Job (Full, Differential, or Incremental) against the time each file was last “modified” (st_mtime) and the time its attributes were last “changed” (st_ctime). If the file was modified or its attributes changed on or after this start time, it will then be backed up.

Some virus scanning software may change st_ctime while doing the scan. For example, if the virus scanning program attempts to reset the access time (st_atime), which Bareos does not use, it will cause st_ctime to change, and hence Bareos will back up the file during an Incremental or Differential backup. In the case of Sophos virus scanning, you can prevent it from resetting the access time (st_atime), and hence changing st_ctime, by using the --no-reset-atime option. For other software, please see their manual.

When Bareos does an Incremental backup, all modified files that are still on the system are backed up. However, any file that has been deleted since the last Full backup remains in the Bareos catalog, which means that if between a Full save and the time you do a restore, some files are deleted, those deleted files will also be restored. The deleted files will no longer appear in the catalog after doing another Full save.

In addition, if you move a directory rather than copy it, the files in it do not have their modification time (st_mtime) or their attribute change time (st_ctime) changed. As a consequence, those files will probably not be backed up by an Incremental or Differential backup which depend solely on these time stamps. If you move a directory, and wish it to be properly backed up, it is generally preferable to copy it, then delete the original.

To track deleted files and directory changes in the catalog during an Incremental backup, you can use Accurate mode. This is quite a memory-consuming process.
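The file selection rule described for the File daemon can be written compactly. Here t_since denotes the start time of the prior Full, Differential or Incremental Job. For the Differential level described below, t_since is the start time of the prior Full Job instead. The notation is ours.

$$
\text{back up } f \iff \texttt{st\_mtime}(f) \ge t_{\text{since}} \;\lor\; \texttt{st\_ctime}(f) \ge t_{\text{since}}
$$

A directory that is moved rather than copied keeps both timestamps on the files inside it, so those files fail this test.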

- Differential

When the Level is set to Differential, all files specified in the FileSet that have changed since the last successful Full backup of the same Job will be backed up. If the Director cannot find a valid previous Full backup for the same Job, FileSet, and Client, then the Differential job will be upgraded into a Full backup. When the Director looks for a valid Full backup record in the catalog database, it looks for a previous Job with:

The same Job name.

The same Client name.

The same FileSet (any change to the definition of the FileSet, such as adding or deleting a file in the Include or Exclude sections, constitutes a different FileSet).

The Job was a Full backup.

The Job terminated normally (i.e. did not fail or was not canceled).

The Job started no longer ago than Max Full Interval.

If any of the above conditions does not hold, the Director will upgrade the Differential to a Full save. Otherwise, the Differential backup will be performed as requested.

The File daemon (Client) decides which files to back up for a Differential backup by comparing the start time of the prior Full backup Job against the time each file was last “modified” (st_mtime) and the time its attributes were last “changed” (st_ctime). If the file was modified or its attributes were changed on or after this start time, it will be backed up. The start time used is displayed after Since in the Job report. In rare cases, using the start time of the prior backup may cause some files to be backed up twice, but it ensures that no change is missed.

When Bareos does a Differential backup, all modified files that are still on the system are backed up. However, any file that has been deleted since the last Full backup remains in the Bareos catalog, which means that if some files are deleted between a Full save and the time you do a restore, those deleted files will also be restored. The deleted files will no longer appear in the catalog after doing another Full save. Removing deleted files from the catalog during a Differential backup is quite a time-consuming process and is not currently implemented in Bareos. It is, however, a planned future feature.

As noted above, if you move a directory rather than copy it, the files in it do not have their modification time (st_mtime) or their attribute change time (st_ctime) changed. As a consequence, those files will probably not be backed up by an Incremental or Differential backup, both of which depend solely on these timestamps. If you move a directory and wish it to be properly backed up, it is generally preferable to copy it, then delete the original. Alternatively, you can move the directory, then use the touch program to update the timestamps.

To track deleted files and changes to directories in the catalog during a Differential backup, you can use Accurate mode. Note that this is quite a memory-consuming process. See the Accurate (Dir->Job) directive for more details.
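The fragment below is a minimal sketch of how Accurate mode might be switched on for a single Job, assuming the boolean Accurate (Dir->Job) directive. The job name is hypothetical, and the other directives a real Job needs are only listed in a comment:

```bareosconfig
Job {
  Name = "backup-client1"      # hypothetical job name
  Type = Backup
  Level = Incremental
  # Let the catalog follow deleted files and moved directories during
  # Incremental/Differential backups. Expect higher memory usage.
  Accurate = yes
  # Client, FileSet, Schedule, Storage, Pool, Messages ... omitted for brevity
}
```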

Every once in a while, someone asks why we need Differential backups as long as Incremental backups pick up all changed files. There are possibly many answers to this question, but the most important one for me is that a Differential backup effectively merges all the Incremental and Differential backups since the last Full backup into a single Differential backup. This has two effects: 1. It gives some redundancy, since the old backups could be used if the merged backup cannot be read. 2. More importantly, it reduces the number of Volumes that are needed to do a restore, effectively eliminating the need to read all the Volumes on which the preceding Incremental and Differential backups since the last Full were written.

- VirtualFull

When the Level is set to VirtualFull, a new Full backup is generated from the last existing Full backup and the matching Differential and Incremental backups. These are matched according to Name (Dir->Client) and Name (Dir->Fileset). This means a new Full backup is created without transferring all the data from the client to the backup server again. The new Full backup will be stored in the pool defined in Next Pool (Dir->Pool).

Warning

Unlike the other backup levels, VirtualFull may require read and write access to multiple Volumes. In most cases you have to make sure that Bareos does not try to read from and write to the same Volume.
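One way to keep reads and writes apart is sketched below, assuming hypothetical pool names Full and VirtualFull and a hypothetical second storage named File2. The pool referenced by the VirtualFull job's Pool directive points its Next Pool at a pool on separate storage:

```bareosconfig
Pool {
  Name = "Full"                # hypothetical pool holding the existing Full backups
  Pool Type = Backup
  # A VirtualFull job using this pool writes its new Full backup here:
  Next Pool = "VirtualFull"
}

Pool {
  Name = "VirtualFull"         # hypothetical target pool
  Pool Type = Backup
  # Separate storage, so the job does not read and write the same Volume.
  Storage = "File2"            # hypothetical Storage resource
}
```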

- Restore

For a Restore Job, no level needs to be specified.

- Verify

For a Verify Job, the Level may be one of the following:

- InitCatalog

does a scan of the specified FileSet and stores the file attributes in the Catalog database. Since no file data is saved, you might ask why you would want to do this. It turns out to be a very simple and easy way to have a Tripwire-like feature using Bareos. In other words, it allows you to save the state of a set of files defined by the FileSet and later check to see if those files have been modified or deleted and if any new files have been added. This can be used to detect system intrusion. Typically you would specify a FileSet that contains the set of system files that should not change (e.g. /sbin, /boot, /lib, /bin, …). Normally, you run the InitCatalog level verify one time when your system is first set up, and then once again after each modification (upgrade) to your system. Thereafter, when you want to check the state of your system files, you use a Verify level = Catalog. This compares the results of your InitCatalog with the current state of the files.

- Catalog

Compares the current state of the files against the state previously saved during an InitCatalog. Any discrepancies are reported. The items reported are determined by the verify options specified on the Include directive in the specified FileSet (see the FileSet resource below for more details). Typically this command will be run once a day (or night) to check for any changes to your system files.

Warning

If you run two Verify Catalog jobs on the same client at the same time, the results will certainly be incorrect. This is because Verify Catalog modifies the Catalog database while running in order to track new files.
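A sketch of such a Tripwire-style setup follows. The resource names are hypothetical, and the verify options pins5 (permission bits, inode, number of links, size and MD5 signature) are an assumed choice; adapt them to the attributes you want compared. Directives a real job also requires, such as Client, Pool and Messages, are omitted:

```bareosconfig
FileSet {
  Name = "SystemFiles"         # hypothetical
  Include {
    Options {
      Signature = MD5
      # Attributes compared by the Verify job (assumed selection).
      Verify = pins5
    }
    File = /sbin
    File = /boot
    File = /lib
    File = /bin
  }
}

Job {
  Name = "verify-system-files" # hypothetical
  Type = Verify
  # Run once with Level = InitCatalog after setup or upgrades,
  # then regularly with Level = Catalog (never two at the same time).
  Level = Catalog
  FileSet = "SystemFiles"
  # Client, Pool, Messages, Schedule ... omitted for brevity
}
```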

- VolumeToCatalog

This level causes Bareos to read the file attribute data written to the Volume from the last backup Job for the job specified on the VerifyJob directive. The file attribute data are compared to the values saved in the Catalog database and any differences are reported. This is similar to the DiskToCatalog level except that instead of comparing the disk file attributes to the catalog database, the attribute data written to the Volume is read and compared to the catalog database. Although the attribute data including the signatures (MD5 or SHA1) are compared, the actual file data is not compared (it is not in the catalog).

VolumeToCatalog jobs require a client to extract the metadata, but this client does not have to be the original client. We suggest using the client on the backup server itself for maximum performance.

Warning

If you run two Verify VolumeToCatalog jobs on the same client at the same time, the results will certainly be incorrect. This is because the Verify VolumeToCatalog modifies the Catalog database while running.

Limitation: Verify VolumeToCatalog does not check file checksums

When running a Verify VolumeToCatalog job the file data will not be checksummed and compared with the recorded checksum. As a result, file data errors that are introduced between the checksumming in the Bareos File Daemon and the checksumming of the block by the Bareos Storage Daemon will not be detected.

- DiskToCatalog

This level causes Bareos to read the files as they currently are on disk, and to compare the current file attributes with the attributes saved in the catalog from the last backup for the job specified on the VerifyJob directive. This level differs from the Catalog level described above in that it does not compare against a previous Verify job but against a previous backup. When you run this level, you must supply the verify options on your Include statements. Those options determine which attribute fields are compared.

This command can be very useful if you have disk problems, because it will compare the current state of your disk against the last successful backup, which may be several jobs back.

Note, the current implementation does not identify files that have been deleted.
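A minimal sketch of a DiskToCatalog verify follows, assuming a hypothetical backup job named backup-client1 and a hypothetical FileSet LinuxAll whose Include carries verify options. The Verify Job directive names the backup whose catalog entries are compared:

```bareosconfig
Job {
  Name = "verify-client1-disk" # hypothetical
  Type = Verify
  # Use VolumeToCatalog instead to compare the attributes stored on the Volume.
  Level = DiskToCatalog
  # Compare against the catalog entries of the last backup of this job.
  Verify Job = "backup-client1"
  FileSet = "LinuxAll"         # hypothetical; its Include needs verify options
  # Client, Pool, Messages ... omitted for brevity
}
```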

- Max Diff Interval

- Type:

The time specifies the maximum allowed age (counting from start time) of the most recent successful Differential backup that is required in order to run Incremental backup jobs. If the most recent Differential backup is older than this interval, Incremental backups will be upgraded to Differential backups automatically. If this directive is not present, or specified as 0, then the age of the previous Differential backup is not considered.

- Max Full Consolidations

- Type:

- Default value:

0

- Since Version:

16.2.4

If “AlwaysIncrementalMaxFullAge” is configured, do not run more than “MaxFullConsolidations” consolidation jobs that include the Full backup.

- Max Full Interval

- Type:

The time specifies the maximum allowed age (counting from start time) of the most recent successful Full backup that is required in order to run Incremental or Differential backup jobs. If the most recent Full backup is older than this interval, Incremental and Differential backups will be upgraded to Full backups automatically. If this directive is not present, or specified as 0, then the age of the previous Full backup is not considered.

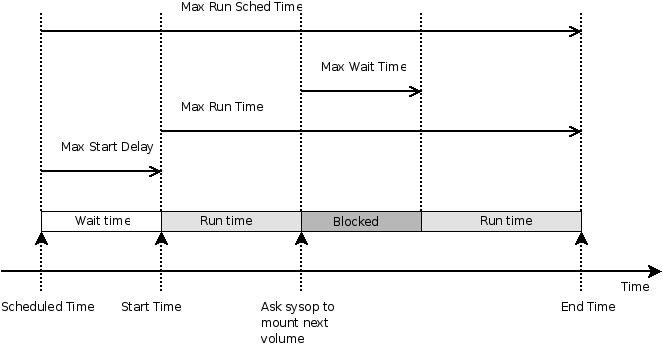
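The two interval directives can be combined, as in the sketch below. The job name and interval values are hypothetical examples, not recommendations:

```bareosconfig
Job {
  Name = "backup-client1"      # hypothetical
  Level = Incremental
  # Upgrade an Incremental to Differential if the last successful
  # Differential started more than 8 days ago ...
  Max Diff Interval = 8 days
  # ... and to Full if the last successful Full started more than 35 days ago.
  Max Full Interval = 35 days
  # Type, Client, FileSet, Pool, Messages ... omitted for brevity
}
```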

- Max Run Sched Time

- Type:

The time specifies the maximum allowed time that a job may run, counted from when the job was scheduled. This can be useful to prevent jobs from running during working hours. It can be thought of as Max Start Delay + Max Run Time.

- Max Run Time

- Type:

The time specifies the maximum allowed time that a job may run, counted from when the job starts (not necessarily the same as when the job was scheduled).

By default, the watchdog thread will kill any Job that has run more than 6 days. The maximum watchdog timeout is independent of Max Run Time and cannot be changed.

- Max Start Delay

- Type:

The time specifies the maximum delay between the scheduled time and the actual start time for the Job. For example, a job can be scheduled to run at 1:00am, but because other jobs are running, it may wait to run. If the delay is set to 3600 (one hour) and the job has not begun to run by 2:00am, the job will be canceled. This can be useful, for example, to prevent jobs from running during daytime hours. The default is no limit.

- Max Virtual Full Interval

- Type:

- Since Version:

14.4.0

The time specifies the maximum allowed age (counting from start time) of the most recent successful Virtual Full backup that is required in order to run Incremental or Differential backup jobs. If the most recent Virtual Full backup is older than this interval, Incremental and Differential backups will be upgraded to Virtual Full backups automatically. If this directive is not present, or specified as 0, then the age of the previous Virtual Full backup is not considered.

- Max Wait Time

- Type:

The time specifies the maximum allowed time that a job may block waiting for a resource (such as waiting for a tape to be mounted, or waiting for the storage or file daemons to perform their duties), counted from when the job starts (not necessarily the same as when the job was scheduled).

Figure: Job time control directives
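The time control directives above can be set together on one Job. The sketch below assumes a hypothetical job scheduled at 1:00am; the values only illustrate how the limits relate, with Max Run Sched Time chosen as Max Start Delay + Max Run Time:

```bareosconfig
Job {
  Name = "nightly-backup"      # hypothetical, scheduled at 1:00am
  # Cancel if the job has not started within an hour of its scheduled time.
  Max Start Delay = 60 minutes
  # Cancel if the job is still running 4 hours after it actually started.
  Max Run Time = 4 hours
  # Cancel if the job blocks longer than this waiting for a resource.
  Max Wait Time = 30 minutes
  # Cancel 5 hours after the scheduled time (60 minutes + 4 hours).
  Max Run Sched Time = 5 hours
  # Type, Client, FileSet, Schedule, Pool, Messages ... omitted for brevity
}
```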

- Maximum Bandwidth

- Type:

The speed parameter specifies the maximum allowed bandwidth that a job may use.

- Maximum Concurrent Jobs

- Type:

- Default value:

1

Specifies the maximum number of Jobs from the current Job resource that can run concurrently. Note, this directive limits only Jobs with the same name as the resource in which it appears. Any other restrictions on the maximum concurrent jobs such as in the Director, Client or Storage resources will also apply in addition to the limit specified here.

For details, see the Concurrent Jobs chapter.

- Messages

- Required:

True

- Type:

The Messages directive defines what Messages resource should be used for this job, and thus how and where the various messages are to be delivered. For example, you can direct some messages to a log file, and others can be sent by email. For additional details, see the Messages Resource Chapter of this manual. This directive is required.

- Name

- Required:

True

- Type:

The name of the resource.

The Job name. This name can be specified on the Run command in the console program to start a job. If the name contains spaces, it must be specified between quotes. It is generally a good idea to give your job the same name as the Client that it will backup. This permits easy identification of jobs.

When the job actually runs, the unique Job Name will consist of the name you specify here followed by the date and time the job was scheduled for execution.

It is recommended to limit job names to 98 characters. Longer names are possible, but when the job is run, its name will be truncated to accommodate certain protocol limitations, as well as the date and time mentioned above.

- Pool

- Required:

True

- Type:

The Pool directive defines the pool of Volumes where your data can be backed up. Many Bareos installations will use only the Default pool. However, if you want to specify a different set of Volumes for different Clients or different Jobs, you will probably want to use Pools. For additional details, see the Pool Resource of this chapter. This directive is required.

In the case of a Copy or Migration job, this setting determines which Pool will be examined to find JobIds to migrate. The exception to this is when Selection Type (Dir->Job) = SQLQuery: although a Pool directive must still be specified, no Pool is used unless you specifically include it in the SQL query. Note that, in any case, the Pool resource defined by the Pool directive must contain a Next Pool (Dir->Pool) = … directive to define the Pool to which the data will be migrated.

- Prefer Mounted Volumes

- Type:

- Default value:

yes

If the Prefer Mounted Volumes directive is set to yes, the Storage daemon is requested to select either an Autochanger or a drive with a valid Volume already mounted in preference to a drive that is not ready. This means that all jobs will attempt to append to the same Volume (provided the Volume is appropriate for that job: right Pool, …), unless you are using multiple pools. If no drive with a suitable Volume is available, it will select the first available drive. Note that any Volume that has been requested to be mounted will be considered valid as a mounted volume by another job. Thus, if multiple jobs start at the same time and they all prefer mounted volumes, the first job will request the mount, and the other jobs will use the same volume.

If the directive is set to no, the Storage daemon will prefer finding an unused drive; otherwise, each job started will append to the same Volume (assuming the Pool is the same for all jobs). Setting Prefer Mounted Volumes to no can be useful for those sites with multiple drive autochangers that prefer to maximize backup throughput at the expense of using additional drives and Volumes. This means that the job will prefer to use an unused drive rather than use a drive that is already in use.

Despite the above, we recommend against setting this directive to no since it tends to add a lot of swapping of Volumes between the different drives and can easily lead to deadlock situations in the Storage daemon. We will accept bug reports against it, but we cannot guarantee that we will be able to fix the problem in a reasonable time.

A better alternative for using multiple drives is to use multiple pools so that Bareos will be forced to mount Volumes from those Pools on different drives.

- Prefix Links

- Type:

- Default value:

no

If a Where path prefix is specified for a recovery job, apply it to absolute links as well. The default is No. When set to Yes then while restoring files to an alternate directory, any absolute soft links will also be modified to point to the new alternate directory. Normally this is what is desired – i.e. everything is self consistent. However, if you wish to later move the files to their original locations, all files linked with absolute names will be broken.

- Priority

- Type:

- Default value:

10

This directive permits you to control the order in which your jobs will be run by specifying a positive non-zero number. The higher the number, the lower the job priority. Assuming you are not running concurrent jobs, all queued jobs of priority 1 will run before queued jobs of priority 2 and so on, regardless of the original scheduling order.

The priority only affects waiting jobs that are queued to run, not jobs that are already running. If one or more jobs of priority 2 are already running, and a new job is scheduled with priority 1, the currently running priority 2 jobs must complete before the priority 1 job is run, unless Allow Mixed Priority is set.

If you want to run concurrent jobs you should keep these points in mind:

See Concurrent Jobs on how to setup concurrent jobs.

Bareos concurrently runs jobs of only one priority at a time. It will not simultaneously run a priority 1 and a priority 2 job.

If Bareos is running a priority 2 job and a new priority 1 job is scheduled, it will wait until the running priority 2 job terminates even if the Maximum Concurrent Jobs settings would otherwise allow two jobs to run simultaneously.

Suppose that Bareos is running a priority 2 job and a new priority 1 job is scheduled and queued waiting for the running priority 2 job to terminate. If you then start a second priority 2 job, the waiting priority 1 job will prevent the new priority 2 job from running concurrently with the running priority 2 job. That is: as long as there is a higher priority job waiting to run, no new lower priority jobs will start even if the Maximum Concurrent Jobs settings would normally allow them to run. This ensures that higher priority jobs will be run as soon as possible.

If you have several jobs of different priority, it may not be best to start them at exactly the same time, because Bareos must examine them one at a time. If Bareos starts a lower priority job first, then it will run before your high priority jobs. If you experience this problem, you may avoid it by starting any higher priority jobs a few seconds before lower priority ones. This ensures that Bareos will examine the jobs in the correct order, and that your priority scheme will be respected.
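A common use of Priority is to let a catalog backup run only after the regular backups. The sketch below assumes two hypothetical jobs; since a higher number means a lower priority, the catalog job waits for queued priority 10 jobs:

```bareosconfig
Job {
  Name = "backup-client1"      # hypothetical
  Priority = 10                # default priority
  # Type, Client, FileSet, Schedule, Pool, Messages ... omitted for brevity
}

Job {
  Name = "backup-catalog"      # hypothetical
  # Lower priority than 10: starts only after the queued priority 10 jobs.
  Priority = 11
  # Type, Client, FileSet, Schedule, Pool, Messages ... omitted for brevity
}
```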

- Protocol

- Type:

- Default value:

Native

The backup protocol to use to run the Job. See dtProtocolType.

- Prune Files

- Type:

- Default value:

no

Normally, pruning of Files from the Catalog is specified on a Client by Client basis in Auto Prune (Dir->Client). If this directive is specified and the value is yes, it will override the value specified in the Client resource.

- Prune Jobs

- Type:

- Default value:

no

Normally, pruning of Jobs from the Catalog is specified on a Client by Client basis in Auto Prune (Dir->Client). If this directive is specified and the value is yes, it will override the value specified in the Client resource.

- Prune Volumes

- Type:

- Default value:

no

Normally, pruning of Volumes from the Catalog is specified on a Pool by Pool basis in the Auto Prune (Dir->Pool) directive. Note that this is different from File and Job pruning, which is done on a Client by Client basis. If this directive is specified and the value is yes, it will override the value specified in the Pool resource.

- Purge Migration Job

- Type:

- Default value:

no

This directive may be added to the Migration Job definition in the Director configuration file to purge the job migrated at the end of a migration.

- Regex Where

- Type:

This directive applies only to a Restore job and specifies a regex filename manipulation of all files being restored. This uses the File Relocation feature.

For more information about how to use this option, see RegexWhere Format.

- Replace

- Type:

- Default value:

Always

This directive applies only to a Restore job and specifies what happens when Bareos wants to restore a file or directory that already exists. You have the following options for replace-option:

- always

when the file to be restored already exists, it is deleted and then replaced by the copy that was backed up. This is the default value.

- ifnewer

if the backed up file (on tape) is newer than the existing file, the existing file is deleted and replaced by the backup.

- ifolder

if the backed up file (on tape) is older than the existing file, the existing file is deleted and replaced by the backup.

- never

if the backed up file already exists, Bareos skips restoring this file.
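A minimal sketch of a Restore job combining these directives follows. The job name and the alternate directory are hypothetical; Replace = ifnewer only overwrites files for which the backed up copy is newer:

```bareosconfig
Job {
  Name = "restore-files"       # hypothetical
  Type = Restore
  # Restore below an alternate directory instead of the original location.
  Where = /tmp/bareos-restores # hypothetical path
  # Overwrite an existing file only if the backed up copy is newer.
  Replace = ifnewer
  # Rewrite absolute symlinks to point into the alternate directory.
  Prefix Links = yes
  # Client, FileSet, Storage, Pool, Messages ... omitted for brevity
}
```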

- Rerun Failed Levels

- Type:

- Default value:

no

If this directive is set to yes (default no), and Bareos detects that a previous job at a higher level (i.e. Full or Differential) has failed, the current job level will be upgraded to the higher level. This is particularly useful for laptops, which may often be unreachable: if a prior Full save has failed, you want the very next backup to be a Full save rather than whatever level it is started as.

There are several points that must be taken into account when using this directive: first, a failed job is defined as one that has not terminated normally, which includes any running job of the same name (you need to ensure that two jobs of the same name do not run simultaneously); secondly, the Ignore File Set Changes (Dir->Fileset) directive is not considered when checking for failed levels, which means that any FileSet change will trigger a rerun.

- Reschedule Interval

- Type:

- Default value:

1800

If you have specified Reschedule On Error = yes and the job terminates in error, it will be rescheduled after the interval of time specified by time-specification. See the time specification formats of TIME for details of time specifications. If no interval is specified, the job will not be rescheduled on error.

- Reschedule On Error

- Type:

- Default value:

no

If this directive is enabled and the job terminates in error, the job will be rescheduled as determined by the Reschedule Interval (Dir->Job) and Reschedule Times (Dir->Job) directives. If you cancel the job, it will not be rescheduled. This specification can be useful for portables, laptops, or other machines that are not always connected to the network or switched on.

- Reschedule Times

- Type:

- Default value:

5

This directive specifies the maximum number of times to reschedule the job. If it is set to zero the job will be rescheduled an indefinite number of times.
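For an often unreachable machine, the rescheduling directives can be combined with Rerun Failed Levels, as in the sketch below. The job name is hypothetical, and 30 minutes equals the default of 1800 seconds:

```bareosconfig
Job {
  Name = "backup-laptop1"      # hypothetical
  # If a previous Full or Differential failed, run that level next time.
  Rerun Failed Levels = yes
  # Retry a job that terminated in error every 30 minutes, at most 5 times.
  Reschedule On Error = yes
  Reschedule Interval = 30 minutes
  Reschedule Times = 5
  # Type, Client, FileSet, Schedule, Pool, Messages ... omitted for brevity
}
```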

- Run

- Type:

The Run directive (not to be confused with the Run option in a Schedule) allows you to start other jobs or to clone the current job.

The part after the equal sign must be enclosed in double quotes and can contain any string or set of options (overrides) that you can specify when entering the run command from the console, for example storage=DDS-4 …. In addition, there are two special keywords that permit you to clone the current job: level=%l and since=%s. The %l in the level keyword inserts the actual level of the current job, and the %s in the since keyword inserts the same comparison time as used by the current job. Note that in the case of the since keyword, the %s must be enclosed in double quotes, which must be preceded by a backslash since they are already inside quotes.
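A sketch of such a clone directive, assuming a hypothetical job named Nightly-backup and a Storage resource named DDS-4; note the backslash-escaped quotes around %s:

```bareosconfig
Job {
  Name = "Nightly-backup"      # hypothetical
  # Start a clone with the same level and since time, but on DDS-4.
  Run = "Nightly-backup level=%l since=\"%s\" storage=DDS-4"
  # Type, Client, FileSet, Pool, Messages ... omitted for brevity
}
```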

A cloned job will not start additional clones, so it is not possible to recurse.

Jobs started by Run (Dir->Job) are submitted for running before the original job (while it is being initialized). This means that any clone job will actually start before the original job, and may even block the original job from starting. It even ignores Priority (Dir->Job). If you are trying to prioritize jobs, you will find it much easier to do so using a Run Script (Dir->Job) resource or a Run Before Job (Dir->Job) directive.

- Run After Failed Job

- Type:

This is a shortcut for the Run Script (Dir->Job) resource that runs a command after a failed job. If the exit code of the program run is non-zero, Bareos will print a warning message.

- Run After Job

- Type:

This is a shortcut for the Run Script (Dir->Job) resource that runs a command after a successful job (without error and without being canceled). If the exit code of the program run is non-zero, Bareos will print a warning message.

- Run Before Job

- Type:

This is a shortcut for the Run Script (Dir->Job) resource that runs a command before a job. If the exit code of the program run is non-zero, the current Bareos job will be canceled.
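For illustration, the shortcut and a RunScript resource that should behave the same way are shown together below. The values follow the description above (run on the Director, before the job, canceling the job on a non-zero exit code); echo test is only a placeholder command:

```bareosconfig
# Shortcut form:
Run Before Job = "echo test"

# Equivalent RunScript resource:
RunScript {
  Command = "echo test"
  RunsOnClient = No
  RunsWhen = Before
  FailJobOnError = Yes
}
```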

- Run On Incoming Connect Interval

- Type:

- Default value:

0

- Since Version:

19.2.4

The interval specifies the time between the most recent successful backup (counting from start time) and the event of a client-initiated connection. When this interval is exceeded, the job is started automatically.

Figure: Timing example for Run On Incoming Connect Interval

- Run Script

- Type:

The RunScript directive behaves like a resource in that it requires opening and closing braces around a number of directives that make up the body of the runscript.

The Command option specifies a command to run as an external program before or after the current job.

Console options are special commands that are sent to the Bareos Director instead of the OS. Console command outputs are redirected to log with the jobid 0.

You can use the following console commands:

delete, disable, enable, estimate, list, llist, memory, prune, purge, release, reload, status, setdebug, show, time, trace, update, version, whoami, .client, .jobs, .pool, .storage. See Bareos Console for more information. You need to specify all needed information on the command line; nothing will be prompted.

You can specify more than one Command/Console option per RunScript.
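A minimal sketch of a RunScript that sends a console command to the Director after the job. The prune command and its use of %c (Client's name) are illustrative assumptions; check in the Bareos Console chapter that the command you use needs no further input:

```bareosconfig
RunScript {
  RunsWhen = After
  # Sent to the Director, not to the OS; output is logged with jobid 0.
  Console = "prune files client=%c"
}
```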

The following options may be specified in the body of the RunScript:

| Option | Value | Description |
|---|---|---|
| Runs On Success | Yes/No | Run if JobStatus is successful |
| Runs On Failure | Yes/No | Run if JobStatus isn’t successful |
| Runs On Client | Yes/No | Run a command on the client (only for external commands, not console commands) |
| Runs When | Never/Before/After/Always/AfterVSS | When to run |
| Fail Job On Error | Yes/No | Fail the job if the script returns something other than 0 |
| Command | | External command (optional) |
| Console | | Console command (optional) |

Any output sent by the command to standard output will be included in the Bareos job report. The command string must be a valid program name or name of a shell script.

RunScript commands that are configured to run “before” a job are also executed before the device reservation.

Warning

The command string is parsed then fed to the OS, which means that the path will be searched to execute your specified command, but there is no shell interpretation. As a consequence, if you invoke complicated commands or want any shell features such as redirection or piping, you must call a shell script and do it inside that script. Alternatively, it is possible to use sh -c '...' in the command definition to force shell interpretation, see example below.

Before executing the specified command, Bareos performs character substitution of the following characters:

| Character | Substituted by |
|---|---|
| %% | % |
| %b | Job Bytes |
| %B | Job Bytes in human readable format |
| %c | Client’s name |
| %d | Daemon’s name (such as host-dir or host-fd) |
| %D | Director’s name (also valid on a Bareos File Daemon) |
| %e | Job Exit Status |
| %f | Job FileSet (only on director side) |
| %F | Job Files |
| %h | Client address |
| %i | Job Id |
| %j | Unique Job Id |
| %l | Job Level |
| %n | Job name |
| %p | Pool name (only on director side) |
| %P | Daemon PID |
| %s | Since time |
| %t | Job type (Backup, …) |
| %v | Read Volume name(s) (only on director side) |
| %V | Write Volume name(s) (only on director side) |
| %w | Storage name (only on director side) |
| %x | Spooling enabled? (“yes” or “no”) |

Some character substitutions are not available in all situations.

The Job Exit Status code %e expands to one of the following values:

- OK
- Error
- Fatal Error
- Canceled
- Differences
- Unknown term code

Because some of these values contain spaces, if you use %e on a command line, you will need to enclose it within some sort of quotes.
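The sketch below passes some of these substitutions to an external script after every job. The script path is hypothetical; %n and %e are wrapped in escaped double quotes because a job name or an exit status such as Fatal Error may contain spaces:

```bareosconfig
RunScript {
  RunsWhen = After
  RunsOnSuccess = Yes
  RunsOnFailure = Yes
  RunsOnClient = No
  # %i = Job Id, %n = Job name, %l = Job Level, %e = Job Exit Status
  Command = "/usr/local/bin/job-report.sh %i \"%n\" %l \"%e\""
}
```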

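You can use the following shortcuts:

| Keyword | RunsOnSuccess | RunsOnFailure | FailJobOnError | Runs On Client | RunsWhen |
|---|---|---|---|---|---|
| Run Before Job | | | Yes | No | Before |
| Run After Job | Yes | No | | No | After |
| Run After Failed Job | No | Yes | | No | After |
| Client Run Before Job | | | Yes | Yes | Before |
| Client Run After Job | Yes | No | | Yes | After |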

Examples:
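The RunScript resources below are a sketch: they stop a service before the backup and start it again afterwards, and use sh -c for shell redirection. The init script and output paths are hypothetical:

```bareosconfig
RunScript {
  RunsWhen = Before
  # Do not fail the backup if the service cannot be stopped.
  FailJobOnError = No
  Command = "/etc/init.d/apache stop"
}

RunScript {
  RunsWhen = After
  # RunsOnSuccess defaults to Yes, so this restarts the service in any case.
  RunsOnFailure = Yes
  Command = "/etc/init.d/apache start"
}

RunScript {
  RunsWhen = Before
  # No shell interpretation by default: call sh -c for the redirection.
  Command = "sh -c 'top -b -n 1 > /var/backup/top.out'"
}
```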

Special Windows Considerations

You can run scripts just after snapshot initialization with the AfterVSS keyword.

In addition, for a Windows client, please take note that you must ensure a correct path to your script. The script or program can be a .com, .exe or a .bat file. If you just put the program name in then Bareos will search using the same rules that cmd.exe uses (current directory, Bareos bin directory, and PATH). It will even try the different extensions in the same order as cmd.exe. The command can be anything that cmd.exe or command.com will recognize as an executable file.

However, if you have slashes in the program name then Bareos figures you are fully specifying the name, so you must also explicitly add the three character extension.

The command is run in a Win32 environment, so Unix like commands will not work unless you have installed and properly configured Cygwin in addition to and separately from Bareos.

The System %Path% will be searched for the command. (In the environment variable dialog you have both System Environment and User Environment; we believe that only the System environment will be available to bareos-fd if it is running as a service.)

System environment variables can be referenced with %var% and used as either part of the command name or arguments.

So if you have a script in the Bareos bin directory then the following lines should work fine:
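For example, assuming a hypothetical batch file systemstate.bat in the Bareos bin directory, each of the following alternative lines (use only one in a Job) should locate it. The path in the last line is a hypothetical install location:

```bareosconfig
# Bare name: searched like cmd.exe does, extensions tried automatically.
Client Run Before Job = "systemstate"
# Bare name with explicit extension.
Client Run Before Job = "systemstate.bat"
# Full path (contains a space): slashes require the extension, and the
# inner quotes are escaped so they survive configuration parsing.
Client Run Before Job = "\"C:/Program Files/Bareos/systemstate.bat\""
```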

The outer set of quotes is removed when the configuration file is parsed. You need to escape the inner quotes so that they are there when the code that parses the command line for execution runs so it can tell what the program name is.

The special characters &<>()@^| will need to be quoted if they are part of a filename or argument.

If someone is logged in, a blank “command” window running the commands will be present during the execution of the command.

Some Suggestions from Phil Stracchino for running on Win32 machines with the native Win32 Bareos File Daemon:

You might want the ClientRunBeforeJob directive to specify a .bat file which runs the actual client-side commands, rather than trying to run (for example) regedit /e directly.

The batch file should explicitly ’exit 0’ on successful completion.

The path to the batch file should be specified in Unix form:

Client Run Before Job = "c:/bareos/bin/systemstate.bat"

rather than DOS/Windows form:

INCORRECT:

Client Run Before Job = "c:\bareos\bin\systemstate.bat"

For Win32, please note that there are certain limitations:

Client Run Before Job = "C:/Program Files/Bareos/bin/pre-exec.bat"

Lines like the above do not work because of limitations of cmd.exe, which is used to execute the command. Bareos prefixes the string you supply with cmd.exe /c. To test that your command works, you should type cmd /c "C:/Program Files/test.exe" at a cmd prompt and see what happens. Once the command is correct, insert a backslash (`\`) before each double quote (`"`), and then put quotes around the whole thing when putting it in the Bareos Director configuration file. You either need to have only one set of quotes or else use the short name and don’t put quotes around the command path.

Below is the output from cmd’s help as it relates to the command line passed to the /c option.

If /C or /K is specified, then the remainder of the command line after the switch is processed as a command line, where the following logic is used to process quote (") characters:

If all of the following conditions are met, then quote characters on the command line are preserved:

no /S switch.

exactly two quote characters.

no special characters between the two quote characters, where special is one of: &<>()@^|

there are one or more whitespace characters between the two quote characters.

the string between the two quote characters is the name of an executable file.

Otherwise, old behavior is to see if the first character is a quote character and if so, strip the leading character and remove the last quote character on the command line, preserving any text after the last quote character.

- Save File History

- Type:

- Default value:

yes

- Since Version:

14.2.0

Allows disabling the storage of the file history, as it causes problems with some implementations of NDMP (out-of-order metadata).

With File History Size (Dir->Job), the maximum number of files and directories inside an NDMP job can be configured.

Warning

The File History is required to do a single file restore from NDMP backups. With this disabled, only full restores are possible.
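A minimal sketch of the two options for an NDMP backup job. The job name and the File History Size value are hypothetical, and the other directives an NDMP job needs (Client, FileSet, Storage, Pool, Protocol and so on) are deliberately left out.

```conf
Job {
  Name = "ndmp-filer-backup"      # hypothetical job name
  Type = Backup
  # Client, FileSet, Storage, Pool, Protocol etc. omitted for brevity

  # Option A: keep the file history (required for single-file restores),
  # but cap the number of files and directories it may contain.
  File History Size = 5000000     # hypothetical value

  # Option B: disable the file history entirely; afterwards only
  # full restores of this job are possible.
  # Save File History = no
}
```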

- Schedule

- Type:

The Schedule directive defines what schedule is to be used for the Job. The schedule in turn determines when the Job will be automatically started and what Job level (i.e. Full, Incremental, …) is to be run. This directive is optional, and if left out, the Job can only be started manually using the Console program. Although you may specify only a single Schedule resource for any one Job, the Schedule resource may contain multiple Run directives, which allow you to run the Job at many different times, and each Run directive permits overriding the default Job Level, Pool, Storage, and Messages resources. This gives considerable flexibility in what can be done with a single Job. For additional details, see Schedule Resource.
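The sketch below shows one Schedule with several Run directives, one of which overrides the Job's Pool, and a Job referencing it. The resource names and the Monthly pool are hypothetical; the Job's remaining directives are omitted.

```conf
Schedule {
  Name = "WeeklyCycle"                           # hypothetical name
  # first Sunday: Full backup, written to a different (hypothetical) Pool
  Run = Level=Full Pool=Monthly 1st sun at 23:05
  Run = Differential 2nd-5th sun at 23:05
  Run = Incremental mon-sat at 23:05
}

Job {
  Name = "backup-client1"                        # hypothetical
  # Type, Client, FileSet, Storage, Pool, Messages omitted for brevity
  Schedule = "WeeklyCycle"                       # a Job references one Schedule
}
```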

- SD Plugin Options

- Type:

These settings are plugin specific, see Storage Daemon Plugins.

- Selection Pattern

- Type:

Selection Pattern is only used for Copy and Migration jobs, see Migration and Copy. The interpretation of its value depends on the selected Selection Type (Dir->Job). For the OldestVolume and SmallestVolume selection types, the Selection Pattern is not used (ignored).

For the Client, Volume, and Job keywords, this pattern must be a valid regular expression that will filter the appropriate item names found in the Pool.

For the SQLQuery keyword, this pattern must be a valid SELECT SQL statement that returns JobIds.

Example:
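A minimal sketch of a Migration job that selects jobs by Client name with a regular expression, plus a commented-out SQLQuery alternative. The job, pool and client names and the SQL condition are hypothetical; the query assumes the catalog's Job table with its JobId, Level and JobStatus columns.

```conf
Job {
  Name = "migrate-client1"              # hypothetical job name
  Type = Migrate
  Pool = Default                        # Pool whose jobs are examined (hypothetical)
  # Client, FileSet, Messages etc. omitted for brevity

  # Client selection: the pattern is a regular expression on Client names.
  Selection Type = Client
  Selection Pattern = "^client1-fd$"    # hypothetical client name

  # SQLQuery alternative: the pattern is a SELECT returning JobId first.
  # Selection Type = SQLQuery
  # Selection Pattern = "SELECT JobId FROM Job WHERE Level = 'F' AND JobStatus = 'T'"
}
```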

- Selection Type

- Type:

Selection Type is only used for Copy and Migration jobs, see Migration and Copy. It determines how a migration job will go about selecting which JobIds to migrate. In most cases, it is used in conjunction with a Selection Pattern (Dir->Job) to give you fine control over exactly which JobIds are selected. The possible values are:

- SmallestVolume

This selection keyword selects the volume with the fewest bytes from the Pool to be migrated. The Pool to be migrated is the Pool defined in the Migration Job resource. The migration control job will then start and run one migration backup job for each of the Jobs found on this Volume. The Selection Pattern, if specified, is not used.

- OldestVolume

This selection keyword selects the volume with the oldest last write time in the Pool to be migrated. The Pool to be migrated is the Pool defined in the Migration Job resource. The migration control job will then start and run one migration backup job for each of the Jobs found on this Volume. The Selection Pattern, if specified, is not used.

- Client

The Client selection type first selects all the Clients that have been backed up in the Pool specified by the Migration Job resource, then applies the Selection Pattern (Dir->Job) as a regular expression to the list of Client names, giving a filtered Client name list. All jobs that were backed up for those filtered (regexed) Clients will be migrated. The migration control job will then start and run one migration backup job for each of the JobIds found for those filtered Clients.

- Volume

The Volume selection type first selects all the Volumes that have been backed up in the Pool specified by the Migration Job resource, then applies the Selection Pattern (Dir->Job) as a regular expression to the list of Volume names, giving a filtered Volume list. All JobIds that were backed up for those filtered (regexed) Volumes will be migrated. The migration control job will then start and run one migration backup job for each of the JobIds found on those filtered Volumes.

- Job

The Job selection type first selects all the Jobs (as defined by the Name (Dir->Job) directive in a Job resource) that have been backed up in the Pool specified by the Migration Job resource, then applies the Selection Pattern (Dir->Job) as a regular expression to the list of Job names, giving a filtered Job name list. All JobIds that were run for those filtered (regexed) Job names will be migrated. Note that for a given Job name, there can be many jobs (JobIds) that ran. The migration control job will then start and run one migration backup job for each of the Jobs found.

- SQLQuery

The SQLQuery selection type uses the Selection Pattern (Dir->Job) as an SQL query to obtain the JobIds to be migrated. The Selection Pattern must be a valid SELECT SQL statement for your SQL engine, and it must return the JobId as the first field of the SELECT.

- PoolOccupancy

This selection type will cause the Migration job to compute the total size of the specified pool for all Media Types combined. If it exceeds the Migration High Bytes (Dir->Pool) defined in the Pool, the Migration job will migrate all JobIds beginning with the oldest Volume in the pool (determined by Last Write time) until the Pool bytes drop below the Migration Low Bytes (Dir->Pool) defined in the Pool. This calculation should be considered rather approximate because it is made once by the Migration job before migration begins, and thus does not take into account additional data written into the Pool during the migration. In addition, the total Pool byte size is based on the Volume bytes saved in the Volume (Media) database entries, whereas the bytes calculated for migration are based on the values stored in the Job records of the Jobs to be migrated. The Job records do not include the Storage daemon overhead that is counted in the total Pool size. As a consequence, the migration will normally migrate more bytes than strictly necessary.

- PoolTime

The PoolTime selection type will cause the Migration job to look at the time each JobId has been in the Pool since the job ended. All Jobs that have been in the Pool longer than the time specified by the Migration Time (Dir->Pool) directive in the Pool resource will be migrated.

- PoolUncopiedJobs

This selection type copies all jobs from a pool to another pool that have not been copied before. It is available only for Copy jobs.
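As an illustration of the PoolOccupancy type, the sketch below defines a source Pool with the two byte thresholds and a Migration job that uses it. All names and sizes are hypothetical, and it assumes the destination of the migrated jobs is given by Next Pool in the source Pool.

```conf
Pool {
  Name = "DiskPool"                 # hypothetical source pool
  Pool Type = Backup
  Next Pool = "TapePool"            # hypothetical destination pool
  Migration High Bytes = 800G       # migration starts above this total size
  Migration Low Bytes = 600G        # ...and continues until below this size
}

Job {
  Name = "migrate-disk-to-tape"     # hypothetical
  Type = Migrate
  Pool = "DiskPool"
  Selection Type = PoolOccupancy
  # Client, FileSet, Messages etc. omitted for brevity
}
```

Because the pool size is measured once before migration starts, the two thresholds are best read as approximate bounds rather than exact targets.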

- Spool Attributes

- Type:

- Default value:

no

If Spool Attributes is disabled, the File attributes are sent by the Storage daemon to the Director as they are stored on tape. However, if you want to avoid the possibility that database updates will slow down writing to the tape, you may want to set the value to yes. In that case, the Storage daemon will buffer the File attributes and Storage coordinates in a temporary file in the Working Directory, and once writing the Job data to the tape is completed, the attributes and storage coordinates will be sent to the Director.

NOTE: When Spool Data (Dir->Job) is set to yes, Spool Attributes is also automatically set to yes. For details, see Data Spooling.
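A minimal sketch enabling attribute spooling on its own, without data spooling. The job name is hypothetical and the other Job directives are omitted.

```conf
Job {
  Name = "backup-client1"      # hypothetical
  # other Job directives omitted for brevity
  # Buffer file attributes in the Storage daemon's Working Directory and
  # send them to the Director only after the job data has been written.
  Spool Attributes = yes
}
```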

- Spool Data

- Type:

- Default value:

no

If this directive is set to yes, the Storage daemon will be requested to spool the data for this Job to disk rather than write it directly to the Volume (normally a tape).

Thus the data is written in large blocks to the Volume rather than small blocks. This directive is particularly useful when running multiple simultaneous backups to tape. Once all the data arrives or the spool files’ maximum sizes are reached, the data will be despooled and written to tape.

Spooling data prevents interleaving data from several jobs and reduces or eliminates tape drive stops and starts, commonly known as “shoe-shine”.

We don’t recommend using this option if you are writing to a disk file; using this option will probably just slow down the backup jobs.

NOTE: When this directive is set to yes, Spool Attributes (Dir->Job) is also automatically set to yes. For details, see Data Spooling.
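A minimal sketch of a tape backup job with data spooling enabled. The job and storage names are hypothetical; as noted above, attribute spooling is switched on implicitly.

```conf
Job {
  Name = "backup-client1-tape"     # hypothetical
  Storage = "Tape"                 # hypothetical tape Storage resource
  # other Job directives omitted for brevity
  Spool Data = yes                 # also implies Spool Attributes = yes
}
```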

- Spool Size

- Type:

This specifies the maximum spool size for this job. The default is taken from the Maximum Spool Size (Sd->Device) limit.
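The sketch below pairs the Storage daemon's device-level limit with a per-job value in the Director. The device name, spool path and sizes are hypothetical, and the device's other directives (Archive Device, Media Type and so on) are omitted.

```conf
# Storage daemon configuration, Device resource: device-wide spool limit
Device {
  Name = "LTO-Drive"                          # hypothetical device name
  # Archive Device, Media Type etc. omitted
  Spool Directory = /var/lib/bareos/spool     # hypothetical path
  Maximum Spool Size = 200G
}

# Director configuration, Job resource: spool limit for this job only
Job {
  Name = "backup-client1-tape"                # hypothetical
  Spool Data = yes
  Spool Size = 50G
}
```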

- Storage

- Type:

The Storage directive defines the name of the storage services where you want to back up the FileSet data. For additional details, see the Storage Resource of this manual. The Storage resource may also be specified in the Job’s Pool resource, in which case the value in the Pool resource overrides any value in the Job. The Storage directive is not required in the Job resource or in the Pool resource individually, but it must be specified in one or the other; otherwise, an error will result.
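A minimal sketch of the override rule just described: the Pool's Storage wins over the Job's. The resource names are hypothetical and unrelated directives are omitted.

```conf
Pool {
  Name = "Full"                  # hypothetical
  Pool Type = Backup
  Storage = "Tape"               # overrides the Job's Storage for this Pool
}

Job {
  Name = "backup-client1"        # hypothetical
  Pool = "Full"
  Storage = "File"               # would be used only if the Pool set no Storage
  # other Job directives omitted for brevity
}
```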

- Strip Prefix

- Type:

This directive applies only to a Restore job and specifies a prefix to remove from the directory name of all files being restored. This will use the File Relocation feature.

Using Strip Prefix=/etc, /etc/passwd will be restored to /passwd.

Under Windows, if you want to restore c:/files to d:/files, you can use:
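A sketch of one way to do this, combining Strip Prefix with the related Add Prefix File Relocation directive (not described in this section). The job name is hypothetical and the other restore Job directives are omitted.

```conf
Job {
  Name = "restore-files"      # hypothetical
  Type = Restore
  # other restore Job directives omitted for brevity
  Strip Prefix = "c:"         # c:/files/... becomes /files/...
  Add Prefix = "d:"           # /files/... becomes d:/files/...
}
```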

- Type

- Required:

True

- Type:

The Type directive specifies the Job type, which is one of the following:

- Backup

- Run a backup Job. Normally you will have at least one Backup job for each client you want to save. Unless you turn off cataloging, almost all the important statistics and data concerning files backed up will be placed in the catalog.

- Restore